How can we estimate relative rotation between images in extreme non-overlapping cases?

Below, we show two non-overlapping image pairs capturing an urban street scene (left) and a church (right). Possible cues revealing their relative geometric relationship include the direction of sunlight and shadows in outdoor scenes, and parallel lines and vanishing points in man-made scenes.

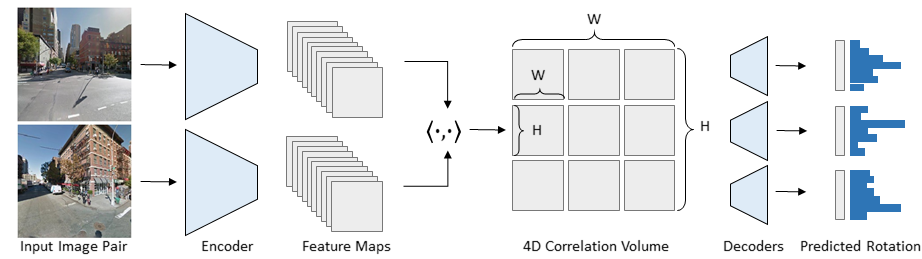

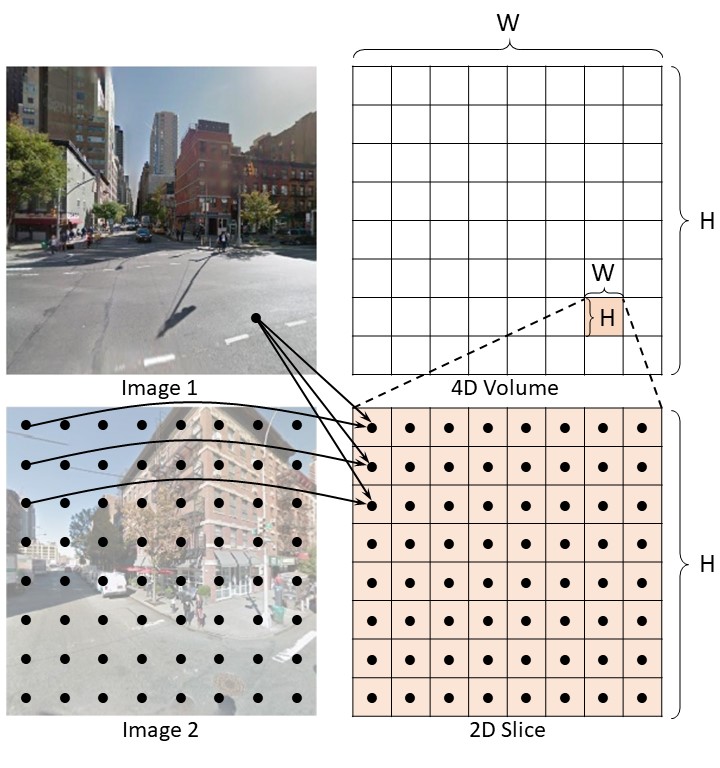

In this work, we present an approach for reasoning about such "hidden" cues for estimating the relative rotation between a pair of (possibly) non-overlapping images.